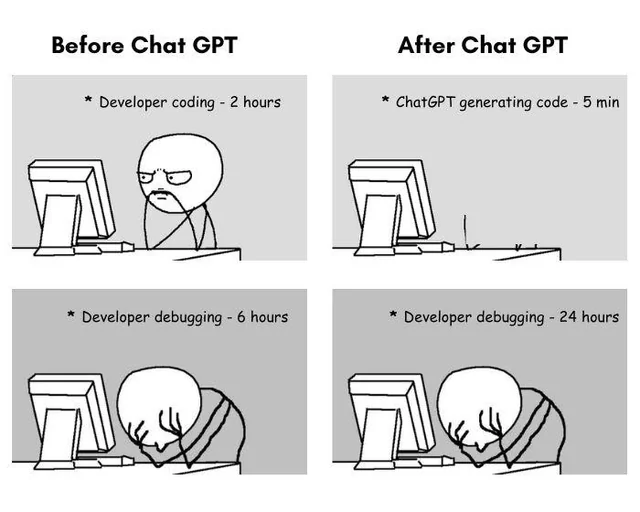

A comprehensive look at prompts to help developers with coding tasks

A good look at prompts that will help with your Vibe Coding Journey.

Prompt engineering is a bit of an art and a bit of a science – and as we’ve seen, it’s quickly becoming a must-have skill for developers working with AI code assistants. By crafting clear, context-rich prompts, you essentially teach the AI what you need, just as you would onboard a human team member or explain a problem to a peer.

Let me know how you do with these prompts.

A professional video commercial made for 50 bucks

In Brazil, producing a professional-quality one-minute advertising video typically costs more than R$100,000 (about US$17,543). The commercial created for Ulianopolis City Hall was made with VEO 3 for only R$300 (about US$52) in VEO 3 credits. That is a major disruption to traditional commercial production: as models start to produce high-quality, native-language output, advertising and creative agencies come under threat and media production workflows are likely to shift soon.

Andrew Ng on AI agents

Andrew Ng’s recent fireside chat offered sharp insights for AI builders and businesses, emphasizing thinking about “agenticness” as a spectrum rather than a binary, and highlighting that current business opportunities often involve translating linear workflows into agentic ones. He underscored the critical importance of evaluation (evals) for agent builders, alongside strong LLM understanding, broad tool knowledge, and adaptability, while also pointing to underrated areas like the voice stack and AI-assisted coding. Ng also noted that while the Model Context Protocol (MCP) is promising for standardizing agent connections, true agent-to-agent communication is still nascent, and that for startups, speed and technical knowledge are the top predictors of success.

ChatGPT enables MCP connectivity

Connectors in ChatGPT allow users to securely connect to third-party applications like Google Drive and GitHub, enabling search, data retrieval, and content referencing within the chat. Depending on the plan and role, users can perform quick searches, deep research, or sync knowledge sources for faster and more accurate responses. Some connectors, such as the Gmail connector, offer less functionality than the Gmail MCP integration for Claude. Because connectors are only offered in the cloud version, ChatGPT cannot natively connect to locally running MCP servers.

DeepSeek R1-0528 & QWEN3-8B

DeepSeek R1-0528 is the newest update to the flagship reasoning model from Chinese AI startup DeepSeek, released on May 28, 2025. This update is making significant waves in the AI community, positioning DeepSeek as a formidable competitor to industry leaders like OpenAI and Google. It enhances performance in mathematics, programming, and logical reasoning, with notable improvements in benchmark tests. For instance, its accuracy on the AIME 2025 test increased from 70% to 87.5%, and its pass rate on LiveCodeBench improved from 63.5% to 73.3%. Additionally, the model’s performance on the “Humanity’s Last Exam” benchmark more than doubled, rising from 8.5% to 17.7%.

DeepSeek has also distilled its large R1-0528 model into a much smaller version built on Qwen3-8B, which matches or exceeds the performance of much larger models on certain benchmarks. This smaller model can run on a single GPU, making advanced AI more accessible to researchers and developers with limited hardware.

Perplexity AI launches Perplexity Labs

Perplexity Labs is a powerful AI-driven tool designed to help users create comprehensive projects such as reports, spreadsheets, dashboards, and simple interactive web applications — all from a single prompt. It is available to Perplexity Pro subscribers on the web, iOS, and Android, with support for Mac and Windows apps planned soon.

Perplexity Labs is suitable for a wide range of personal and professional tasks, such as:

- Market research and financial analysis (e.g., building trading strategy dashboards)

- Business reporting and data visualization

- Academic research summaries

- Personal planning (meal plans, travel itineraries)

- Building mini web apps for presentations or data exploration

On Coding with LLMs - My AI Skeptic Friends Are All Nuts

This essay foretells the future of coding with AI agents. It reminds me of autopilot on an airplane or Tesla’s self-driving AI, where the human’s job is to monitor and tweak the inputs to make sure you reach the destination.

People coding with LLMs today use agents. Agents get to poke around your codebase on their own. They author files directly. They run tools. They compile code, run tests, and iterate on the results. They also:

- pull in arbitrary code from the tree, or from other trees online, into their context windows,

- run standard Unix tools to navigate the tree and extract information,

- interact with Git,

- run existing tooling, like linters, formatters, and model checkers, and

- make essentially arbitrary tool calls (that you set up) through MCP.
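The "run tests and iterate on the results" part of that description can be sketched as a small loop. This is only an illustration under stated assumptions: `propose_patch` is a hypothetical stand-in for a model call (here it applies a hard-coded fix), and `calc.py` and `check.py` are made-up files created in a temporary directory. A real agent would send the source and the failing output to an LLM and would have many more tools available.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_tests(workdir: Path) -> tuple[bool, str]:
    """Run the project's check script and return (passed, combined output)."""
    proc = subprocess.run(
        # -B stops Python from writing .pyc files, so an edited module is
        # always re-read from source on the next run.
        [sys.executable, "-B", "check.py"],
        cwd=workdir,
        capture_output=True,
        text=True,
    )
    return proc.returncode == 0, proc.stdout + proc.stderr


def propose_patch(source: str, feedback: str) -> str:
    """Hypothetical stand-in for a model call: returns a new version of the file.

    A real agent would pass `source` and `feedback` to an LLM here.
    """
    return source.replace("a - b", "a + b")


def agent_loop(workdir: Path, target: str, max_steps: int = 3) -> bool:
    """Run the tests, read the output, edit the file, and retry until they pass."""
    for _ in range(max_steps):
        passed, output = run_tests(workdir)
        if passed:
            return True
        path = workdir / target
        path.write_text(propose_patch(path.read_text(), output))
    return run_tests(workdir)[0]


def demo() -> bool:
    """Create a tiny broken project in a temp directory and let the loop fix it."""
    with tempfile.TemporaryDirectory() as tmp:
        workdir = Path(tmp)
        (workdir / "calc.py").write_text("def add(a, b):\n    return a - b\n")
        (workdir / "check.py").write_text(
            "from calc import add\nassert add(2, 3) == 5, add(2, 3)\n"
        )
        return agent_loop(workdir, "calc.py")
```

Calling `demo()` runs the failing check once, applies the stand-in patch, and re-runs the check. The human's role in this picture is choosing the checks and the step limit, then reviewing what the loop produced.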

Tenniix - Your AI powered tennis partner & coach

T-Apex has introduced Tenniix, an AI-powered robotic tennis coach that can serve at speeds of up to 75 mph and deliver 1,000 customized drills tailored to players’ skill levels. Unlike traditional ball machines, this 15-pound portable robot can move around the court and execute a range of shots, including baseline groundstrokes and high lobs.

Humanoid Robots from Hugging Face

Hugging Face, an AI development platform, has expanded its robotics efforts by unveiling two new open-source humanoid robots: HopeJR and Reachy Mini. HopeJR is a full-sized humanoid robot with 66 degrees of freedom, capable of walking and moving its arms. Reachy Mini is a desktop unit designed for testing AI applications, able to move its head, talk, and listen. Both robots are open-source and intended to be affordable to prevent the robotics field from being dominated by a few large companies with “black-box systems.” HopeJR is estimated to cost around $3,000, while Reachy Mini will be approximately $250-$300. Hugging Face aims to begin shipping the first units of these robots by the end of 2025, with a waitlist currently open. This initiative was partly made possible by Hugging Face’s acquisition of humanoid robotics startup Pollen Robotics in April 2025.

Robot Boxing Matches are here

This was the first-ever kickboxing tournament exclusively featuring humanoid robots. The robots, designed by Unitree, were remotely operated by humans and competed for the top spot. It is the latest example of China’s growing focus on robotic exhibitions, following last month’s half marathon.

VS Code AI Editor version to be open source

From the VS Code team’s announcement: We believe that the future of code editors should be open and powered by AI. For the last decade, VS Code has been one of the most successful OSS projects on GitHub. We are grateful for our vibrant community of contributors and users who choose VS Code because it is open source. As AI becomes core to the developer experience in VS Code, we intend to stay true to our founding development principles: open, collaborative, and community-driven.

Personalized (AI) Asset Creator

Generated Assets, a tool developed by Public Holdings, Inc., utilizes artificial intelligence to assist investors in creating, refining, and sharing investment ideas. By leveraging AI, Generated Assets empowers users to transform any investment concept into an asset. It offers features such as screening stock listings, allocating weighting, and comparing past performance against the S&P 500. This comprehensive tool serves as an educational resource, providing investors with enhanced insights and market data to explore and evaluate their investment thesis.

GPT-4.1 and GPT-4.1 mini released

OpenAI is rolling out GPT-4.1 and GPT-4.1 mini to ChatGPT users, following their initial release in the API. GPT-4.1, a specialized model, is now accessible to Plus, Pro, and Team subscribers via the “more models” dropdown. It excels particularly in coding and precise instruction following and has the same rate limits as GPT-4o. Simultaneously, the faster and more capable GPT-4.1 mini is replacing GPT-4o mini for all users and serves as the fallback model for free users once their GPT-4o limits are reached. It offers significant improvements in instruction following, coding, and overall intelligence, with the same rate limits.

Insurance coverage for AI mishaps

Lloyd’s of London, through the startup Armilla, has launched a new insurance product aimed at companies facing risks from AI-related malfunctions, such as errors and hallucinations in systems like chatbots. This development comes as the adoption of AI increases, alongside concerns about the potential for costly mistakes and reputational damage caused by underperforming AI that may confidently provide incorrect information. The insurance policies are designed to cover legal costs if a company is sued due to harm caused by its AI product’s technical failures. The introduction of this insurance underscores the real-world technical challenges in building and deploying reliable and consistently predictable AI systems, particularly regarding accountability when AI errors occur.

AlphaEvolve - An agent for designing advanced algorithms

Google DeepMind’s AlphaEvolve project uses large language models to invent algorithms beyond human expertise. The project combines coding skills, algorithm testing, and an evolutionary method to produce new designs. AlphaEvolve has generated more efficient algorithms for various computations and real-world problems, demonstrating the potential for AI to come up with novel ideas through experimentation and evaluation.
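The propose, test, and select cycle described above can be illustrated with a toy evolutionary loop. This is a minimal sketch, not AlphaEvolve itself: `mutate` is a hypothetical stand-in for the language-model step that proposes a changed program (here it just adds random noise to a list of numbers), and `evaluate` is an invented scoring function standing in for the automated tests.

```python
import random


def evaluate(candidate: list[float]) -> float:
    """Automated evaluator: higher is better (closeness to a made-up target)."""
    target = [3.0, -1.0, 2.0]
    return -sum((c - t) ** 2 for c, t in zip(candidate, target))


def mutate(parent: list[float], rng: random.Random) -> list[float]:
    """Hypothetical stand-in for the LLM step that proposes a modified candidate."""
    return [x + rng.gauss(0.0, 0.3) for x in parent]


def evolve(
    generations: int = 200, population_size: int = 20, seed: int = 0
) -> list[float]:
    """Repeatedly propose variants, score them, and keep the best performers."""
    rng = random.Random(seed)
    population = [[0.0, 0.0, 0.0] for _ in range(population_size)]
    for _ in range(generations):
        children = [
            mutate(rng.choice(population), rng) for _ in range(population_size)
        ]
        # Selection: parents and children compete; only the top scorers survive.
        population = sorted(population + children, key=evaluate, reverse=True)
        population = population[:population_size]
    return population[0]
```

Because parents stay in the pool, the best score never gets worse from one generation to the next. The loop only works when candidates can be scored automatically, which fits the emphasis on experimentation and evaluation above.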

OpenAI HealthBench - Evaluating your AI Doctor

HealthBench is a new benchmark designed to provide a more meaningful, trustworthy, and unsaturated evaluation of AI systems, particularly large language models, in realistic health scenarios. Developed in collaboration with 262 physicians, HealthBench comprises 5,000 diverse, multi-turn, and multilingual health conversations simulating interactions between AI and users or clinicians. A key technical aspect is its rubric-based evaluation system, where physician-authored criteria with weighted points are used to grade model responses, with assessment performed by a model-based grader (GPT-4.1). This rigorous approach aims to overcome the limitations of existing health evaluations and provide a robust benchmark to drive continuous improvement in AI for health applications.

Key Points for a Technical Audience

- Large-Scale, Diverse Dataset: HealthBench provides a dataset of 5,000 realistic, multi-turn, and multilingual health conversations, capturing a wide range of medical specialties, contexts, and user/provider personas, offering a rich testbed for AI models.

- Fine-Grained Rubric Evaluation: The benchmark utilizes a detailed rubric-based evaluation system with 48,562 unique, physician-written criteria, allowing for a granular assessment of model responses against specific medical standards and priorities.

- Model-Based Grading: Evaluation of model responses against the extensive rubric criteria is performed by a model-based grader (GPT-4.1), providing an automated yet detailed assessment mechanism.

- Designed for Model Improvement: HealthBench is intentionally designed to be “unsaturated,” meaning current state-of-the-art models do not achieve perfect scores, providing significant room and clear signals for model developers to identify weaknesses and improve performance.

- Focus on Realistic Interactions: The benchmark moves beyond simple question-answering to evaluate AI capabilities in more complex, realistic health conversations, assessing aspects like context-seeking, response depth, expertise-tailored communication, and handling uncertainty.
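To make the weighted-rubric idea concrete, here is a minimal scoring sketch. It rests on assumptions: the criteria and point values are invented for illustration, the `met` flags would come from the model-based grader in practice, and the normalization (points earned divided by the total of the positive points, clipped to the range 0 to 1) is my understanding of how such a rubric is aggregated, not a copy of the official implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    description: str
    points: int  # positive for desirable behavior, negative for harmful behavior


def rubric_score(criteria: list[Criterion], met: list[bool]) -> float:
    """Score one response: points earned over maximum possible, clipped to [0, 1]."""
    if len(criteria) != len(met):
        raise ValueError("need exactly one met/not-met judgment per criterion")
    max_points = sum(c.points for c in criteria if c.points > 0)
    if max_points == 0:
        raise ValueError("rubric needs at least one positively weighted criterion")
    earned = sum(c.points for c, is_met in zip(criteria, met) if is_met)
    return min(1.0, max(0.0, earned / max_points))


# Invented example rubric, for illustration only.
EXAMPLE_RUBRIC = [
    Criterion("Advises seeking emergency care for red-flag symptoms", 5),
    Criterion("Asks a clarifying question about symptom duration", 3),
    Criterion("Recommends a specific prescription dose without context", -4),
]
```

With this example rubric, a response that meets only the first criterion scores 5/8 = 0.625, and one that meets all three scores (5 + 3 - 4)/8 = 0.5. Negative criteria let the rubric penalize harmful content rather than only rewarding good content.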

Continuous Thought Machines

Sakana AI introduces the Continuous Thought Machine (CTM), a novel artificial neural network architecture inspired by biological brains that utilizes the synchronization of neuron dynamics and timing information for its reasoning process. Unlike traditional AI models, the CTM “thinks” through tasks in a step-by-step manner, offering interpretability into its decision-making. This approach has demonstrated improvements in problem-solving and efficiency across various tasks, exhibiting emergent, human-like behaviors such as interpretable maze solving and attentive image processing. The CTM represents an effort to integrate principles from neuroscience into artificial intelligence to unlock new capabilities and enhance efficiency.

Key Points

- Novel AI Architecture: Introduction of the Continuous Thought Machine (CTM) presents a new biologically inspired AI approach that could offer different capabilities compared to current deep learning models used in fintech.

- Enhanced Interpretability: The CTM’s step-by-step “thinking” process offers a level of interpretability into how decisions are reached, which is crucial for explainability and regulatory compliance in financial applications like credit scoring, fraud detection, and risk assessment.

- Potential for Improved Efficiency: The model’s ability to adapt its processing time based on task complexity suggests potential for greater computational efficiency in handling varying workloads typical in fintech operations.

- Learning Complex Patterns: The CTM’s capacity for learning complex, emergent behaviors through neuron synchronization could be valuable for identifying sophisticated, non-obvious patterns in financial data, such as money laundering or novel fraud schemes.

- Bridging AI and Neuroscience: The research explores integrating brain-like mechanisms into AI, potentially leading to more nuanced and adaptive decision-making systems relevant for complex financial modeling and automated trading strategies.

Alibaba’s ‘ZeroSearch’ lets AI learn to google itself

Alibaba has unveiled “ZeroSearch,” a new AI training method that allows large language models (LLMs) to simulate searching the web without relying on commercial search engine APIs like Google. This approach uses reinforcement learning to teach AI models to retrieve relevant information by generating simulated search results, leveraging the LLM’s pre-existing world knowledge. The method drastically reduces the cost and unpredictability of training AI assistants for information retrieval, providing developers with more control and making advanced AI development accessible to smaller companies. In trials, ZeroSearch matched or outperformed models trained with real search engines, and Alibaba has open-sourced the technology for broader adoption.

Scaling RL training to reach AGI

OpenAI researcher Dan Roberts at AI Ascent 2025 reveals how reasoning capabilities in AI are evolving, why test-time compute is revolutionizing the field, and the controversial shift to prioritizing reinforcement learning over pre-training.